Windows containers

What are Windows containers?

Windows containers provide abstracted, isolated, lightweight and portable operating environments for application development on a single system. These environments make it easier for developers to develop, deploy, run and manage their applications and application dependencies, both on premises and in the cloud.

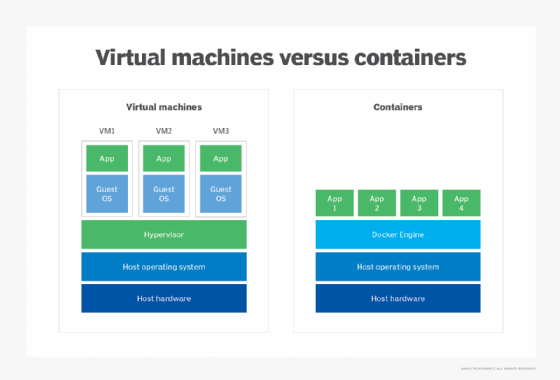

A container is a logical environment created on a computer where an application can run. Containers and guest applications are abstracted from the host computer's hardware resources and also logically isolated from other containers. Containers are different from virtual machines (VMs) because they do not rely on a separate hypervisor layer but are supported by the underlying operating system (OS).

Windows containers are useful for packaging and running enterprise applications. Both Windows OS and Linux OS applications are supported. Since the containers are lightweight and fast, they can be started and stopped quickly, making them ideal for developing applications that can rapidly adapt and scale up or down to changing demand. They are also useful for optimizing infrastructure utilization and density.

Windows containers are suitable for developing any kind of application, including monoliths and microservices. These applications can be developed in any language, including Python, Java and .NET, and can run across both on-premises and cloud environments.

How Windows containers work

Containers are created on a container host system that controls how much of its resources can be used by individual containers. For example, it can limit CPU usage to a certain percentage that applications cannot exceed. The host also provides each container with a virtualized namespace that grants access only to the resources the container needs. Such restricted access ensures the container behaves as if it is the only application running on the system.
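As a minimal sketch of how such a limit might be requested, the snippet below assembles a `docker run` command line with a CPU percentage cap and a memory cap. The `--cpu-percent` (Windows only), `--memory` and `--isolation` options are Docker CLI flags; the helper name `build_run_command`, the image reference and the limit values are illustrative assumptions. The command is only constructed here, not executed.

```python
import shlex


def build_run_command(image, cpu_percent, memory, isolation="process"):
    """Return a `docker run` argument list with resource caps (hypothetical helper)."""
    if not 1 <= cpu_percent <= 100:
        raise ValueError("cpu_percent must be between 1 and 100")
    return [
        "docker", "run", "--rm",
        f"--cpu-percent={cpu_percent}",  # cap CPU usage as a percentage (Windows only)
        f"--memory={memory}",            # cap memory, e.g. "512m"
        f"--isolation={isolation}",      # "process" or "hyperv" on Windows hosts
        image,
    ]


# Illustrative image reference; substitute one that matches the host version.
cmd = build_run_command("mcr.microsoft.com/windows/nanoserver:ltsc2022", 50, "512m")
print(shlex.join(cmd))
```

Keeping the limits as function parameters makes it easy to apply different caps to different containers on the same host.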

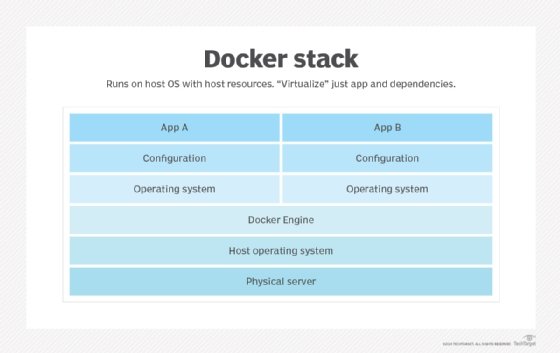

All containers on a system build on top of and share the same OS kernel, so there's no need to install a separate OS instance for each container. Each container does not get complete access to the kernel; it only gets an isolated or virtualized view of the system, meaning it can access a virtualized version of the OS file system and registry. Changes to those elements will only affect that container.
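The isolated view described above can be pictured with a small conceptual model. This is not how Windows implements container storage; it is only a sketch, using Python's `collections.ChainMap`, of the idea that each container reads from a shared base but writes into its own private layer. The file path and the helper name `new_container_view` are hypothetical.

```python
from collections import ChainMap

# Read-only layer standing in for files shipped in a shared base image.
base_layer = {r"C:\app\config.ini": "mode=default"}


def new_container_view(base):
    """Give a container its own writable layer on top of the shared base."""
    return ChainMap({}, base)  # writes land in the first (private) mapping


container_a = new_container_view(base_layer)
container_b = new_container_view(base_layer)

# Container A modifies a file; only its private layer changes.
container_a[r"C:\app\config.ini"] = "mode=custom"

print(container_a[r"C:\app\config.ini"])  # value seen inside container A
print(container_b[r"C:\app\config.ini"])  # container B still sees the base value
```

After the write, container A sees its own value while container B and the base layer are unchanged, which mirrors the statement that changes affect only the container that made them.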

The APIs and services an app needs to run are provided by system libraries instead of the kernel. These libraries are files running above the kernel in user mode and packaged into a container or base image. Container images are required to create a container. When the image is deployed to a host system with a compatible container platform such as Docker, the application will run without needing to install or update any other components on the host.

The combination of virtualization and the container image makes containers very portable and self-sufficient. These qualities let developers create, test and deploy applications to production without making changes to the image. They can also interconnect multiple containers to create larger applications using a microservices architecture. These applications are scalable and consist of blocks or functions that communicate with each other via APIs.

Microsoft containers ecosystem

Microsoft's broad container ecosystem makes it easy to develop and deploy containers. Docker Desktop is part of this ecosystem and can be used to develop and test Windows- or Linux-based containers on Windows 10.

All apps can be published as container images to the public Docker Hub or to a private Azure Container Registry. Microsoft tools like Visual Studio and Visual Studio Code support containers and technologies like Docker and Kubernetes to develop, test, publish and deploy Windows-based containers.

Containers can be deployed at scale on premises or in the cloud. For deployment in Azure or other clouds, an orchestrator like Azure Kubernetes Service (AKS) can pull the container image from a container registry and manage the resulting containers. For on-premises deployment, AKS on Azure Stack HCI, Azure Stack with the AKS Engine or Azure Stack Hub with OpenShift can be used.
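To illustrate what an orchestrated deployment might look like, the sketch below builds a minimal Kubernetes Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl` accepts alongside YAML. The `kubernetes.io/os: windows` node selector is the standard Kubernetes label for scheduling onto Windows nodes; the helper name, the app name `web` and the registry address `myregistry.azurecr.io` are hypothetical.

```python
import json


def windows_deployment(name, image, replicas=2):
    """Build a minimal Kubernetes Deployment targeting Windows nodes."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Schedule pods only onto Windows worker nodes.
                    "nodeSelector": {"kubernetes.io/os": "windows"},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


# Hypothetical private registry and image name.
manifest = windows_deployment("web", "myregistry.azurecr.io/web:1.0")
print(json.dumps(manifest, indent=2))
```

The node selector matters in mixed clusters, where Linux and Windows nodes coexist and a Windows image must not be scheduled onto a Linux node.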

Windows container images

The Windows container image is a file or package that includes the container's own copy of all user-mode system files. It includes details of the application, data, OS libraries, and all the dependencies and miscellaneous configuration files needed to operate the application. The image can be stored in a local, public or private container repository.

Microsoft provides the following four base images that developers can use to build their own Windows container images:

- Windows. This is the full Windows base image and contains all Windows libraries, APIs and system services except for server roles.

- Windows Server. This base image contains a full set of Windows APIs and system services; it is equivalent to a full installation of Windows Server.

- Windows Server Core. This base image is smaller and contains a subset of the APIs, libraries and services included in Windows Server. It also includes many server roles, though not all.

- Nano Server. This is the smallest Windows base image in terms of image size and the number of libraries and services. It includes support for the .NET Core APIs and some server roles.
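As a rough sketch of how a base image is selected when building a custom image, the snippet below maps each base image to its repository path on the Microsoft Container Registry and generates the opening lines of a Dockerfile. The helper name `dockerfile_for`, the `/app` working directory and the `ltsc2022` tag are assumptions for illustration; the tag in particular should be chosen to match the container host.

```python
# Repository paths on the Microsoft Container Registry (MCR).
BASE_IMAGES = {
    "windows": "mcr.microsoft.com/windows",
    "server": "mcr.microsoft.com/windows/server",
    "servercore": "mcr.microsoft.com/windows/servercore",
    "nanoserver": "mcr.microsoft.com/windows/nanoserver",
}


def dockerfile_for(base, tag):
    """Return Dockerfile text that starts from the chosen Windows base image."""
    if base not in BASE_IMAGES:
        raise ValueError(f"unknown base image: {base}")
    lines = [
        f"FROM {BASE_IMAGES[base]}:{tag}",  # base image supplies user-mode system files
        "WORKDIR /app",                     # hypothetical application directory
        "COPY . .",                         # add the application on top of the base
    ]
    return "\n".join(lines) + "\n"


# The tag is an assumption; pick one that matches the container host.
dockerfile_text = dockerfile_for("servercore", "ltsc2022")
print(dockerfile_text)
```

Starting from the smallest base image that still provides the APIs an application needs generally keeps the resulting image smaller and faster to pull.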

Windows and Linux container platform

The Windows and Linux container platform includes numerous tools, such as the following:

- Containerd. Introduced in Windows Server 2019 and Windows 10 version 1809, containerd is a daemon that manages the entire container lifecycle, from downloading and unpacking the container image to executing and supervising containers.

- Runhcs. Runhcs is a Windows container host and a fork of runc. The runhcs command-line client can be used to run applications packaged according to the Open Container Initiative (OCI) format and runtime specification. It runs on Windows and can run many container types, including Windows and Linux Hyper-V isolation and Windows process containers.

- HCS. The Host Compute Service (HCS) is a C language API. Two wrappers are available to call it from higher-level languages. One is hcsshim, which is written in Go and is the basis for runhcs. The other is dotnet-computevirtualization, which is written in C#.

Virtual machines vs. Windows containers

VMs and containers are complementary but different technologies. A VM runs a complete OS, including its own kernel, which is why it requires more system resources, such as memory and storage. In contrast, containers build upon the host OS kernel and contain only apps, APIs and services running in user mode. As a result, they use fewer system resources.

Another difference is that it is possible to run any OS inside a VM, while containers run on the same OS as the host and, by default, the same OS version. VMs also provide a stronger security boundary since the VM is completely isolated from the host OS and other VMs. Containers only provide lightweight isolation from the host and other containers. That said, the security of Windows containers can be increased by isolating each container in a lightweight VM using the Hyper-V isolation mode, which also allows a container built for an earlier version of the OS to run on a newer host.
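The version constraint and the Hyper-V isolation mode mentioned above can be sketched as a simple decision rule. This is a deliberately simplified model, and real compatibility rules vary by Windows release: a matching build is assumed to allow process isolation, an older image is assumed to need Hyper-V isolation, and a newer image is assumed not to run. The function name `pick_isolation` is hypothetical; the returned strings correspond to the values accepted by Docker's `--isolation` flag.

```python
def pick_isolation(host_build, image_build):
    """Choose an isolation mode from host and image OS build numbers.

    Simplified rule: same build -> process isolation; older image ->
    Hyper-V isolation; newer image -> not runnable on this host.
    """
    if image_build == host_build:
        return "process"
    if image_build < host_build:
        return "hyperv"
    raise ValueError("image is newer than the host and cannot run on it")


# Build numbers: 17763 = Windows Server 2019, 20348 = Windows Server 2022.
print(pick_isolation(20348, 20348))  # matching versions
print(pick_isolation(20348, 17763))  # older image on a newer host
```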

Other key differences between VMs and Windows containers include the following:

- Individual VMs can be deployed by using Windows Admin Center or Microsoft Hyper-V Manager, while individual containers can be deployed with Docker.

- Multiple VMs can be deployed using PowerShell or System Center Virtual Machine Manager. Multiple containers are best deployed using an orchestrator like AKS, which helps manage containers at scale and provides functionalities for workload scheduling, health monitoring, networking, service discovery and more.

- For local persistent storage, a single VM uses a virtual hard disk, while a single container node uses Azure Disks.

- Running VMs can be moved to other servers in a failover cluster for load balancing. Containers themselves are not moved; instead, an orchestrator starts or stops containers in response to changes in load.

- VMs use virtual network adapters, while containers use an isolated view of a virtual network adapter and share the host's firewall with other containers.
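To make the load-balancing difference in the list above more concrete, here is a minimal sketch of how an orchestrator might decide how many container replicas to run. It follows the scaling formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), and omits the tolerances and stabilization windows a real autoscaler applies. The function name and the sample load figures are assumptions.

```python
import math


def desired_replicas(current_replicas, current_load, target_load):
    """Return the replica count needed to bring per-replica load to the target."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    # Scale proportionally to the ratio of observed load to target load,
    # rounding up, and never scale below one replica.
    return max(1, math.ceil(current_replicas * current_load / target_load))


# Average CPU at 90% against a 50% target: the orchestrator starts containers.
print(desired_replicas(3, 90, 50))
# Average CPU at 25% against a 50% target: the orchestrator stops containers.
print(desired_replicas(4, 25, 50))
```

Because containers start and stop quickly, adjusting the replica count in this way is usually cheaper than migrating a running VM.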
