Microsoft Windows Server 2016

What is Windows Server 2016?

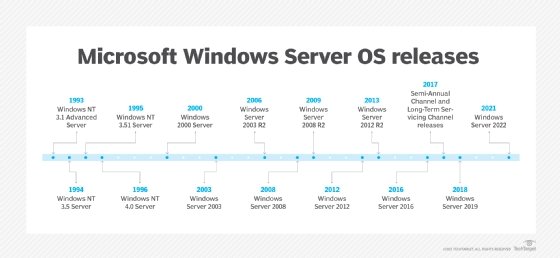

Microsoft Windows Server 2016 is Microsoft's server operating system (OS). It was specifically developed to serve as a platform for running networked applications. Windows Server 2016 was released for general availability on Oct. 12, 2016, and was developed concurrently with Windows 10. Mainstream support for Windows Server 2016 ended on Jan. 11, 2022.

Windows Server 2016 is part of the Windows NT family of OSes. Microsoft Windows Server OS is a series of enterprise-class server OSes designed to share services with multiple users, providing extensive administrative control of data storage, applications and corporate networks.

Windows Server 2016 is the successor to Windows Server 2012 R2. It includes new identity management features and enhanced security capabilities designed to help organizations access data safely, whether it is stored locally, in the cloud or in a hybrid cloud. Its successors include Windows Server 2019, Windows Server 2022 and the Windows Server Semi-Annual Channel releases.

Notable features in Windows Server 2016

A highly anticipated feature of Windows Server 2016 was the inclusion of two types of native containers: Windows Server containers and Hyper-V containers. Windows Server containers run directly on the host OS and share its kernel, but each container is isolated from the others. Hyper-V containers provide stronger isolation because each one runs inside its own lightweight Microsoft Hyper-V virtual machine (VM) with a separate kernel. Windows Server 2016 also provided built-in support for Docker.
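To make the difference between the two container types concrete, the Python sketch below builds `docker run` command lines for each. It assumes the Docker CLI's `--isolation` option, which on Windows hosts accepts `process` (a Windows Server container) and `hyperv` (a Hyper-V container). The helper name `docker_run_args`, the container-type labels and the image tag are illustrative assumptions, not part of any Microsoft tooling.

```python
"""Sketch: build `docker run` argument lists for the two Windows container types.

Assumes the Docker CLI on a Windows Server 2016 host, where `--isolation`
accepts "process" or "hyperv". Labels and image tag are illustrative.
"""
from typing import List

# Hypothetical labels mapped to Docker's isolation values.
ISOLATION_MODES = {
    "windows-server": "process",  # shares the host kernel, isolated per container
    "hyper-v": "hyperv",          # runs inside its own lightweight Hyper-V VM
}


def docker_run_args(container_type: str, image: str, command: List[str]) -> List[str]:
    """Return the argv for `docker run` using the isolation mode for container_type."""
    try:
        isolation = ISOLATION_MODES[container_type]
    except KeyError:
        raise ValueError(f"unknown container type: {container_type!r}") from None
    return ["docker", "run", "--rm", f"--isolation={isolation}", image, *command]


if __name__ == "__main__":
    image = "mcr.microsoft.com/windows/servercore:ltsc2016"  # assumed image tag
    for kind in ISOLATION_MODES:
        print(" ".join(docker_run_args(kind, image, ["cmd", "/c", "ver"])))
```

The two generated commands differ only in the isolation flag; the image and the command run inside the container stay the same.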

Other new and updated features in Windows Server 2016 include the following:

- Nano Server is a lightweight, headless installation option designed for cloud-based infrastructure and container scenarios. Nano Server does not include a graphical user interface, local logon or the full .NET Framework; administrators manage Nano Server instances remotely using PowerShell and Windows Management Instrumentation (WMI). There's also an updated PowerShell module for building Nano Server images.

- Network Controller is a new server role that provides a centralized, programmable point of automation to configure, manage, monitor and troubleshoot virtual and physical network devices and services. Network Controller can be managed with Microsoft System Center products, such as Virtual Machine Manager and Operations Manager, or with PowerShell.

- Storage Quality of Service (QoS) provides a way to centrally monitor and manage storage performance for VMs and automatically improves storage resource fairness between multiple VMs using the same file server cluster.

- Storage Replica, a feature new in Windows Server 2016, enables storage-agnostic, block-level synchronous replication between servers or clusters for disaster preparedness and recovery.

- Storage Spaces Direct enables highly available and scalable software-defined storage built from servers with local storage. This simplifies the deployment and management of software-defined storage systems.

- Rolling upgrades for Hyper-V and Scale-Out File Server clusters let administrators add a Windows Server 2016 node to a cluster whose nodes run Windows Server 2012 R2, so the cluster can be upgraded node by node.

- Hot add and remove lets administrators add or remove virtual network adapters while a VM is running. A related capability, runtime memory resize, lets them adjust the memory assigned to a running VM.

- Just Enough Administration (JEA) is a PowerShell security technology that restricts users to performing only the tasks their role authorizes.

- Encryption Supported mode gives VMs more protection than ordinary VMs receive, but less protection than Shielded mode.

- Identity and access updates cover Active Directory Certificate Services, Active Directory Domain Services and Active Directory Federation Services. A separate set of updates targets management and automation.

- Health Service helps to improve monitoring, operations and maintenance of cluster resources.
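The core idea behind Just Enough Administration, a deny-by-default allowlist scoped to a user's roles, can be illustrated with a short Python sketch. Real JEA is configured through PowerShell role capability and session configuration files; the role names and the role-to-command mapping below are hypothetical and only model the concept.

```python
"""Conceptual sketch of the JEA idea: role-scoped command allowlists.

Real JEA is configured with PowerShell role capability (.psrc) and session
configuration (.pssc) files; the roles and mapping here are hypothetical.
"""
from typing import Dict, FrozenSet, Iterable

# Hypothetical roles mapped to the only commands each role may run.
ROLE_CAPABILITIES: Dict[str, FrozenSet[str]] = {
    "dns-operator": frozenset({"Get-DnsServerZone", "Clear-DnsServerCache"}),
    "helpdesk": frozenset({"Get-Service", "Restart-Service"}),
}


def allowed_commands(roles: Iterable[str]) -> FrozenSet[str]:
    """Union of the commands granted by every role the user holds."""
    granted: FrozenSet[str] = frozenset()
    for role in roles:
        # Unknown roles grant nothing.
        granted |= ROLE_CAPABILITIES.get(role, frozenset())
    return granted


def authorize(roles: Iterable[str], command: str) -> bool:
    """Deny by default: a command runs only if one of the user's roles grants it."""
    return command in allowed_commands(roles)


if __name__ == "__main__":
    print(authorize(["helpdesk"], "Restart-Service"))  # True
    print(authorize(["helpdesk"], "Stop-Computer"))    # False
```

Granting the union of a user's roles means that holding several roles widens access, while any command not explicitly listed stays blocked.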

Is Windows Server 2016 still being supported?

The first technical preview of Windows Server 2016 was released on Oct. 1, 2014. A second and third preview were released in May and August 2015, respectively. Windows Server 2016 was officially released on Oct. 12, 2016.

However, mainstream support for Windows Server 2016 ended on Jan. 11, 2022. Since then, the OS receives only security updates, with no new improvements or nonsecurity bug fixes. Extended support is set to end on Jan. 12, 2027, after which Windows Server 2016 will no longer receive security updates either.
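The support timeline above reduces to a simple date comparison. The Python sketch below classifies a given date into a support phase using the dates stated in this article. Treating each end date as the last day of its phase is an assumption made for illustration.

```python
"""Sketch: classify a date against the Windows Server 2016 support dates in this article.

Assumption: each end date is treated as the last day of its phase.
"""
from datetime import date

GA_DATE = date(2016, 10, 12)
MAINSTREAM_END = date(2022, 1, 11)
EXTENDED_END = date(2027, 1, 12)


def support_phase(on: date) -> str:
    """Return the support phase in effect on the given date."""
    if on < GA_DATE:
        return "pre-release"
    if on <= MAINSTREAM_END:
        return "mainstream"      # improvements, bug fixes and security updates
    if on <= EXTENDED_END:
        return "extended"        # security updates only
    return "end of support"      # no further updates


def days_until_end_of_support(on: date) -> int:
    """Days remaining until extended support ends (0 once it has ended)."""
    return max(0, (EXTENDED_END - on).days)


if __name__ == "__main__":
    today = date.today()
    print(support_phase(today), days_until_end_of_support(today))
```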

Windows Server 2016 minimum requirements

Windows Server 2016 requires at least the following hardware:

- a 1.4 GHz 64-bit processor;

- 512 MB of RAM (2 GB for the Server with Desktop Experience installation option);

- 32 GB of disk space; and

- an Ethernet adapter capable of at least 1 Gbps throughput.

Actual requirements depend on the system configuration and the roles and features installed. Processor performance, for example, depends not only on the processor's clock frequency but also on the number of processor cores and the size of the processor cache.
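A pre-installation check against the listed minimums might look like the Python sketch below. The `ServerSpec` fields and helper name are hypothetical, and only the four numeric thresholds from the requirements list are checked; processor architecture and other requirements are not modeled. As noted above, meeting the minimums does not guarantee adequate performance.

```python
"""Sketch: check a server spec against the Windows Server 2016 minimums in this article.

Field and function names are hypothetical. Passing these checks does not
guarantee adequate performance for a given configuration.
"""
from dataclasses import dataclass
from typing import List

MIN_CPU_GHZ = 1.4
MIN_RAM_MB = 512
MIN_DISK_GB = 32
MIN_NIC_GBPS = 1.0


@dataclass(frozen=True)
class ServerSpec:
    cpu_ghz: float   # processor clock speed
    ram_mb: int      # installed memory
    disk_gb: int     # disk space available for the OS
    nic_gbps: float  # Ethernet adapter throughput


def unmet_requirements(spec: ServerSpec) -> List[str]:
    """Return a readable list of the minimums the spec fails to meet."""
    problems: List[str] = []
    if spec.cpu_ghz < MIN_CPU_GHZ:
        problems.append(f"processor slower than {MIN_CPU_GHZ} GHz")
    if spec.ram_mb < MIN_RAM_MB:
        problems.append(f"less than {MIN_RAM_MB} MB of RAM")
    if spec.disk_gb < MIN_DISK_GB:
        problems.append(f"less than {MIN_DISK_GB} GB of disk space")
    if spec.nic_gbps < MIN_NIC_GBPS:
        problems.append(f"Ethernet adapter below {MIN_NIC_GBPS:g} Gbps")
    return problems


if __name__ == "__main__":
    spec = ServerSpec(cpu_ghz=2.2, ram_mb=8192, disk_gb=120, nic_gbps=10.0)
    print(unmet_requirements(spec) or "meets the listed minimums")
```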

What's the difference between the Standard and Datacenter editions?

Windows Server 2016 offers several editions, two of which are the Standard edition and the Datacenter edition. Both editions share many of the same features, but the Datacenter edition is tailored to organizations with heavier workloads, larger virtual infrastructures and more demanding IT requirements. The Datacenter edition includes the features of the Standard edition and adds some features that the Standard edition lacks.

For example, in the Datacenter edition:

- Users can run an unlimited number of VMs, although each license covers only one Hyper-V host.

- Users can run an unlimited number of Windows Server containers and Hyper-V containers.

- There are no restrictions on where the server is hosted: it can run either as a physical host or as a virtualized guest.

- Software-defined networking, a feature not available in the Standard edition, enables users to configure and manage their physical and virtual network devices centrally.

- Shielded Virtual Machines, a feature that is also not available in the Standard edition, helps protect VMs with Secure Boot, virtual Trusted Platform Modules and BitLocker disk encryption.

- Network Controller lets users simplify the management, configuration and monitoring of their network infrastructure.
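When planning a deployment, the edition question often comes down to whether any required feature is Datacenter-only. The Python sketch below encodes that check for a subset of the Datacenter-only features named in this section, software-defined networking and Network Controller. The feature labels and the function name are illustrative assumptions, and the set is deliberately incomplete.

```python
"""Sketch: decide whether a planned deployment needs the Datacenter edition.

Only a subset of Datacenter-only features from this article is encoded;
labels and function name are illustrative assumptions.
"""
from typing import Iterable

# Datacenter-only features named in this article (a subset, lowercase labels).
DATACENTER_ONLY = frozenset({
    "software-defined networking",
    "network controller",
})


def required_edition(features: Iterable[str]) -> str:
    """Return "Datacenter" if any requested feature is Datacenter-only, else "Standard"."""
    requested = {name.strip().lower() for name in features}
    return "Datacenter" if requested & DATACENTER_ONLY else "Standard"


if __name__ == "__main__":
    print(required_edition(["Hyper-V", "Network Controller"]))
```

Normalizing case and whitespace keeps the lookup tolerant of how feature names are typed; extending the check only requires adding labels to `DATACENTER_ONLY`.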
